Docker swarm

This summer @megamsys we started implementing microservices (containers) on bare metal for our customer and for our public service, currently in beta.

The market is largely about 4 kinds of offerings, plus the emerging unikernels, which owe their rise to some of the security issues posed by the famous Docker.

- Containers in a virtual machine (VM). Everybody uses a fancy terminology, and we call it a DockerBox. This is by far the easiest to do, since you have isolation handled already inside a VM.

- Improvements to containers like Rocket, Flockport, RancherOS, Kurma, Jetpack (FreeBSD) and systemd-nspawn, built on LXC, systemd-nspawn or a custom build.

- Container OSes like Project Atomic, CoreOS, Snappy, Nano Server (guess who?) and Photon (VMware, ugh!), which help to run containers inside them.

- Containers on bare metal. Install and run containers directly on bare metal, as this provides profound performance.

The container market is consolidating, with big brother Docker taking the lead in coming up with opencontainers.org. But we believe Docker has an undue advantage, as its standard will be superimposed on everyone else, as noted by CoreOS.

The emerging ones are unikernels, or library kernels, which run just one app on top of a hypervisor. This is secure but still nascent. We would eventually like to support the above.

Containers in a VM cluster or CoreOS like

| Who | Link | Where |
| --- | --- | --- |
| Google container engine | | VM |
| Elasticbox | Elasticbox | VM |
| Panamax by centurylink | Panamax | CoreOS |
| Apcera by Ericsson | Apcera | Kurma |
| Engineyard (DEIS) | Deis | CoreOS |
| Profitbricks | Profitbricks | Bare metal (maybe) |
| Krane | Krane | VM |
| Joyent Triton | Triton | Don't know |
| Shipyard | Shipyard | On-premise bare metal |
| Docker machine | Docker | VM |
| Openshift | Atomic | VM |
| Rancher | Rancher | On-premise bare metal |
| Cloudfoundry | Garden | Don't know |
| IBM Bluemix | Bluemix | Don't know |
| Openstack | Openstack - Docker | Don't know (confusing) |
| Cloudify | Cloudify | Don't know |
| Photon (VMware) | Photon | CoreOS-like, proprietary |
| runC (new kid) | Opencontainers | Opencontainer, bare metal |

The funny thing in the above table is how everybody royally screws up OpenStack by pushing it into containers when it doesn't need to go there.

Anyway, last year we had Docker running inside a virtual machine. In our hackathon we demonstrated running containers inside managed VMs.

But containers are best utilized on bare metal.

We needed a way to run them on bare metal.

Stay with me. Yes, we are warming up to the problem now.

We need a reliable way to run containers in a datacenter with various clustered hosts.

What do you mean?

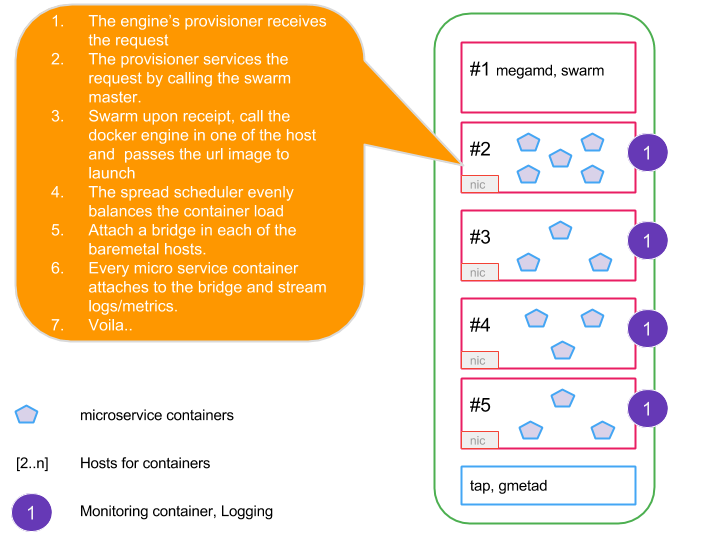

A picture is worth a thousand words.

To do that we need schedulers that can orchestrate and compose containers. We use the terms microservice and container interchangeably; here they both mean the same thing.

Containers orchestrated by schedulers

Let's look at the orchestration for an on-premise bare metal cloud.

Almost unanimously, companies choose one of the following:

| Who | Link | Orchestrator |
| --- | --- | --- |
| Openshift | Origin | Kubernetes |
| Tectonic | Tectonic - CoreOS | Kubernetes |
| Cloudify | Cloudify | Don't know |
| Docker | Docker compose | Fig (Docker compose) |
| Rancher | Rancher | Don't know |
| Panamax by centurylink | Panamax | Kubernetes |
| Cloudfoundry | Garden | Don't know |

Most vendors do container orchestration using Docker Compose (formerly Fig) or Kubernetes.

Well, at Megam, as seen from the picture, we have our own omni scheduler built using golang.

| Who | Link | Orchestrator |
| --- | --- | --- |
| Megam | megamd | Megam |

Diving into the problem

We need a reliable way to run containers in a datacenter with various clustered hosts.

Docker swarm

We started looking at Docker swarm. It sounded so sweet: you run swarm and just join new Docker engines into your “dockercluster” on the go as your datacenter nodes expand.

### No, we were plain WRONG.

Why? If you revisit our architecture, we had Docker engines running on a bunch of servers, with a swarm cluster formed on top of them. The swarm master talks to all the Docker engines and provisions containers on bare metal, load balanced across all the hosts.

Eg:

- As developer0, let's say I submit the first container from [our public beta developer edition - console.megam.io](https://console.megam.io). Oh yeah, there is also an on-premise edition from a marketplace.

- Similarly, developer1 and developer2 submit concurrently to the swarm cluster.

- swarm needs to spread and schedule/load balance the containers on all the hosts equally.
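To make "spread equally" concrete, here is a minimal sketch of a spread-style placement in Go. It is not swarm's scheduler code: the `Host` type and the `pickSpread` and `schedule` helpers are hypothetical names invented for this illustration, and the only rule assumed is "place each new container on the host that currently has the fewest".

```go
package main

import (
	"errors"
	"fmt"
)

// Host is a hypothetical stand-in for one docker engine in the cluster.
type Host struct {
	Name       string
	Containers int // containers already placed on this host
}

// pickSpread returns the index of the host with the fewest containers.
// Ties go to the host listed first.
func pickSpread(hosts []Host) (int, error) {
	if len(hosts) == 0 {
		return -1, errors.New("no hosts in the cluster")
	}
	best := 0
	for i := 1; i < len(hosts); i++ {
		if hosts[i].Containers < hosts[best].Containers {
			best = i
		}
	}
	return best, nil
}

// schedule places n containers one at a time and returns the resulting counts.
func schedule(hosts []Host, n int) ([]Host, error) {
	out := append([]Host(nil), hosts...) // copy, so the caller's slice is untouched
	for j := 0; j < n; j++ {
		i, err := pickSpread(out)
		if err != nil {
			return nil, err
		}
		out[i].Containers++
	}
	return out, nil
}

func main() {
	hosts := []Host{{"host-a", 2}, {"host-b", 0}, {"host-c", 1}}
	placed, err := schedule(hosts, 3)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	for _, h := range placed {
		fmt.Printf("%s: %d containers\n", h.Name, h.Containers)
	}
}
```

With three submissions against hosts holding 2, 0 and 1 containers, every host ends up with 2. A real scheduler would also weigh CPU, memory and constraints; this sketch only counts containers.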

Instead, the current swarm is broken: it takes a mutex lock on the scheduler and simply waits until the first container you submitted is complete.

In essence, submitting containers becomes a serial operation.
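The effect of such a lock can be reproduced with a small Go sketch. Nothing here is swarm's real code: the `cluster` type, `create` and `submit` are hypothetical, and `time.Sleep` stands in for the slow part of creating a container. The sketch counts how many creates overlap when the lock is held for the whole call versus not at all.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"time"
)

// cluster is a hypothetical stand-in for a swarm cluster, not swarm's real type.
type cluster struct {
	schedLock sync.Mutex // plays the role of the scheduler lock
	inFlight  int32      // creates running right now
	maxSeen   int32      // highest number of creates seen running at once
}

// create simulates one slow container create. With holdLock set, the lock is
// held for the entire call, so a second caller waits until the first is done.
func (c *cluster) create(holdLock bool, workMillis int) {
	if holdLock {
		c.schedLock.Lock()
		defer c.schedLock.Unlock()
	}
	n := atomic.AddInt32(&c.inFlight, 1)
	for { // record the peak concurrency
		m := atomic.LoadInt32(&c.maxSeen)
		if n <= m || atomic.CompareAndSwapInt32(&c.maxSeen, m, n) {
			break
		}
	}
	time.Sleep(time.Duration(workMillis) * time.Millisecond) // the slow part
	atomic.AddInt32(&c.inFlight, -1)
}

// submit fires n concurrent creates and returns the peak number of overlapping
// creates and the elapsed wall time in milliseconds.
func submit(n int, holdLock bool, workMillis int) (int32, int64) {
	c := &cluster{}
	var wg sync.WaitGroup
	start := time.Now()
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			c.create(holdLock, workMillis)
		}()
	}
	wg.Wait()
	return atomic.LoadInt32(&c.maxSeen), time.Since(start).Milliseconds()
}

func main() {
	for _, hold := range []bool{true, false} {
		peak, ms := submit(5, hold, 50)
		fmt.Printf("lock held=%v peak concurrent creates=%d elapsed=%dms\n", hold, peak, ms)
	}
}
```

With the lock held, the peak never goes above one and the elapsed time is at least the sum of all the creates. Without it, the creates overlap. Whether the lock can be dropped safely in swarm itself depends on what else it protects, which this sketch does not model.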

We poked into the code and found the culprit, shown below as fixed in megamsys/swarm. The lock may have been left in intentionally, as Docker intends to support Apache Mesos.

    // CreateContainer aka schedule a brand new container into the cluster.
    func (c *Cluster) CreateContainer(config *cluster.ContainerConfig, name string) (*cluster.Container, error) {
        //*MEGAM* https://www.megam.io remove the mutex scheduler lock
        //c.scheduler.Lock()
        //defer c.scheduler.Unlock()
        // ...
    }

Once we fixed the above code and packaged swarm, it worked like a charm.

We believe Docker doesn’t want to open up its default scheduler, but would rather force people to use Mesos. We at Megam are allergic to Java (JVM), as it bloats memory too much, and we use it judiciously.